Documenting AI-Enabled SaMD: What FDA’s Jan 2025 Draft Expects

- Beng Ee Lim

- Jun 3, 2025

Updated: Sep 7, 2025

FDA’s Jan 7, 2025 draft guidance for AI-enabled device software functions recommends including: a model description; dataset lineage, partitions, and representativeness; performance metrics tied to claims; bias analysis and mitigation; human-AI use; postmarket monitoring; and, if you plan to update the model, a Predetermined Change Control Plan (PCCP). Package these in the Software (DSF) and related sections of eSTAR, which is already mandatory for 510(k) submissions and becomes mandatory for De Novo requests on Oct 1, 2025.

Why AI/ML Documentation Matters Now

With AI-driven Software as a Medical Device (SaMD) becoming ubiquitous, the FDA’s January 2025 draft guidance emphasizes transparency and thoroughness. Inadequate model details led to multiple submission holds in late 2024. Proper documentation speeds reviews, reduces back-and-forth, and demonstrates safety, effectiveness, and fairness.

Recommended Sections per January 2025 Draft

Model Overview & Intended Use

What to include:

Include the algorithm type (e.g., CNN, transformer) and an architecture diagram.

Describe the clinical purpose and risk classification (IMDRF category, FDA Class).

Provide a functional description of the Device Software Function (DSF).

Why: FDA needs clarity on what the model does, who uses it, and the associated risk in order to assess safety and effectiveness.

Data Lineage & Training

What to include:

Detail source datasets including institutions, dates, and patient demographics.

Explain preprocessing steps such as normalization and augmentation.

Describe labeling methodology and quality control procedures.

Why: Demonstrates dataset provenance and reproducibility, which is critical for trustworthiness and regulatory review.
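One way to make dataset provenance checkable is to generate a manifest for each data partition that records every file with its size and content hash. The sketch below is only an illustration using the Python standard library; the function names and the `partition` and `source_site` fields are hypothetical, not a format the draft prescribes.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 64 KiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path, partition: str, source_site: str) -> dict:
    """Record every file under data_dir with its relative path, size, and hash."""
    files = [
        {
            "path": p.relative_to(data_dir).as_posix(),
            "bytes": p.stat().st_size,
            "sha256": sha256_of_file(p),
        }
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    ]
    return {
        "partition": partition,      # e.g. "train", "tune", "test" (assumed labels)
        "source_site": source_site,  # hypothetical field for the contributing institution
        "file_count": len(files),
        "files": files,
    }
```

Committing the resulting manifest alongside the preprocessing scripts lets a reviewer confirm that the files behind a reported result are the ones described in the submission.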

Performance Metrics & Validation

What to include:

Provide internal test results with metrics like ROC/AUC, sensitivity, specificity.

Include performance on at least one external validation dataset.

Present confusion matrices or equivalent tables.

Why: Ensures the model generalizes beyond development data and supports claims of safety and effectiveness.
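The metrics listed above can be computed directly from labels and model outputs. The following is a minimal sketch for a binary classifier, assuming labels are coded 1 (positive) and 0 (negative); the function names are illustrative, and a real submission would add confidence intervals and a prespecified operating threshold.

```python
from typing import Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> dict:
    """Count true/false positives and negatives for binary labels."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for truth, pred in zip(y_true, y_pred):
        if truth == 1:
            counts["tp" if pred == 1 else "fn"] += 1
        else:
            counts["fp" if pred == 1 else "tn"] += 1
    return counts


def sensitivity_specificity(counts: dict) -> tuple:
    """Return (sensitivity, specificity); NaN when a class is absent."""
    positives = counts["tp"] + counts["fn"]
    negatives = counts["tn"] + counts["fp"]
    sens = counts["tp"] / positives if positives else float("nan")
    spec = counts["tn"] / negatives if negatives else float("nan")
    return sens, spec


def auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the probability that a random positive case outscores a
    random negative case, with ties counted as one half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

Running the same functions on the internal test set and on the external dataset keeps the two sets of results directly comparable.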

Bias & Fairness Analysis

What to include:

Provide demographic breakdowns (age, sex, race) of test cohorts.

Compare performance metrics across these subgroups.

Describe mitigation strategies such as oversampling or algorithm adjustments.

Why: Addresses health equity concerns and regulatory scrutiny on bias in AI models.
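A subgroup comparison can be as simple as computing the same metric per demographic group and flagging groups that trail the best-performing one. The sketch below does this for sensitivity; the record layout (`label`, `pred`, plus a group field) and the `max_gap` tolerance are assumptions made for illustration, not thresholds taken from the draft.

```python
from collections import defaultdict


def subgroup_sensitivity(records: list, group_key: str) -> dict:
    """Sensitivity per subgroup. Groups with no positive cases are omitted,
    because sensitivity is undefined for them."""
    tp = defaultdict(int)
    fn = defaultdict(int)
    for r in records:
        if r["label"] == 1:
            if r["pred"] == 1:
                tp[r[group_key]] += 1
            else:
                fn[r[group_key]] += 1
    groups = sorted(set(tp) | set(fn))
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}


def flag_gaps(per_group: dict, max_gap: float) -> list:
    """Return subgroups whose metric trails the best subgroup by more than max_gap."""
    best = max(per_group.values())
    return [g for g, value in per_group.items() if best - value > max_gap]
```

Small subgroups produce noisy estimates, so a flagged gap is a prompt to look at sample size and confidence intervals before concluding that the model is biased.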

Model Update Plan (PCCP)

What to include:

Specify trigger conditions for updates (e.g., data drift, performance drop below threshold).

Outline retraining frequency and validation procedures for each update.

Describe rollback mechanisms and monitoring approaches.

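Why: A PCCP is optional, but without an authorized one, modifications that could significantly affect safety or effectiveness generally need a new marketing submission. An authorized PCCP lets you implement the prespecified changes while maintaining assurance of continued safety and effectiveness.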
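Trigger conditions are easier to review when they are written as explicit, testable rules. The sketch below checks two hypothetical triggers: a sensitivity floor and a ceiling on input drift, measured here with the population stability index (PSI) as one possible drift statistic. The class name, field names, and threshold values are placeholders for illustration, not values from FDA.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class UpdateTriggers:
    min_sensitivity: float  # hypothetical performance floor
    max_drift_score: float  # hypothetical ceiling on the drift statistic


def population_stability_index(expected, observed, eps=1e-6):
    """PSI between two binned distributions given as proportions.
    Proportions are floored at eps to avoid taking log of zero."""
    if len(expected) != len(observed):
        raise ValueError("distributions must use the same bins")
    total = 0.0
    for e, o in zip(expected, observed):
        e, o = max(e, eps), max(o, eps)
        total += (o - e) * math.log(o / e)
    return total


def evaluate_triggers(sensitivity: float, drift_score: float,
                      triggers: UpdateTriggers) -> list:
    """Return the names of the trigger conditions that fired, if any."""
    reasons = []
    if sensitivity < triggers.min_sensitivity:
        reasons.append("sensitivity_below_floor")
    if drift_score > triggers.max_drift_score:
        reasons.append("input_drift_above_ceiling")
    return reasons


# Example: compare the binned input distribution at training time with production.
triggers = UpdateTriggers(min_sensitivity=0.90, max_drift_score=0.25)
drift = population_stability_index([0.25, 0.75], [0.5, 0.5])
fired = evaluate_triggers(sensitivity=0.88, drift_score=drift, triggers=triggers)
```

Returning the list of fired conditions, rather than a single boolean, gives the monitoring log a record of why a retraining or rollback review was opened.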

Explainability & Transparency

What to include:

Include visuals such as SHAP plots or Grad-CAM heatmaps highlighting feature importance.

Provide a concise “Explainability Summary” paragraph for clinicians.

Why: Helps reviewers and end users understand the model logic and builds trust.

Security & Privacy Controls

What to include:

Describe data de-identification methods, encryption at rest and in transit.

Detail access controls for model and data.

Why: Ensures compliance with FDA cybersecurity expectations and protects patient data and model integrity.
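As one illustration of a de-identification step, the sketch below drops direct identifiers from a record and replaces the patient ID with a keyed hash, so the same patient maps to the same pseudonym without exposing the original ID. The field names and the `subject_key` output field are hypothetical, and this is only a fragment of a de-identification procedure, not a complete one.

```python
import hashlib
import hmac

# Hypothetical field names; adapt to the actual data dictionary.
DIRECT_IDENTIFIERS = {"name", "mrn", "address", "phone"}


def pseudonymize_id(patient_id: str, secret_key: bytes) -> str:
    """Keyed SHA-256 hash (HMAC) of the patient ID, as a hex string."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify_record(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and swap the patient ID for a pseudonym."""
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "patient_id"
    }
    cleaned["subject_key"] = pseudonymize_id(str(record["patient_id"]), secret_key)
    return cleaned
```

The secret key has to be stored separately from the dataset and under its own access controls, since anyone holding it can recompute the pseudonym for a known patient ID.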

Best Practices & Pro Tips

Version Control for Data Lineage: Store dataset versions and preprocessing scripts in Git or Git LFS so that training data and transformations stay reproducible and traceable, which supports the dataset provenance FDA emphasizes.

Automate Metric Tracking: Using tools like MLflow to log performance metrics (AUC, accuracy, etc.) for each training run supports robust validation and documentation. This aligns with FDA’s focus on comprehensive performance metrics and validation reporting.

Iterative Bias Testing: Performing demographic performance checks at multiple development milestones, not just before submission, addresses FDA concerns about bias and fairness throughout lifecycle management.

Embed Explainability Visuals in eSTAR: Including explainability visuals (e.g., SHAP, Grad-CAM) as attachments in the eSTAR submission PDF facilitates reviewer understanding and transparency, consistent with FDA’s explainability and transparency recommendations.

Leverage Pre-Sub Feedback: Engaging in FDA Pre-Submission meetings focused on AI documentation is strongly recommended to identify gaps early and align with FDA expectations, as described in FDA’s Q-Submission Program guidance and industry best practices.

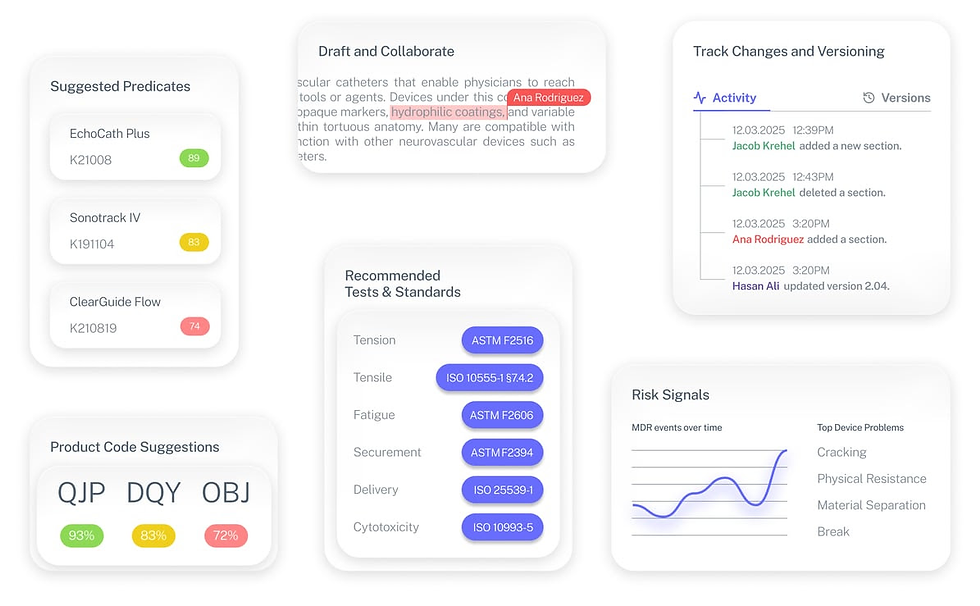

The Fastest Path to Market

No more guesswork. Move from research to a defendable FDA strategy, faster. Backed by FDA sources. Teams report 12 hours saved weekly.

FDA Product Code Finder, find your code in minutes.

510(k) Predicate Intelligence, see likely predicates with 510(k) links.

Risk and Recalls, scan MAUDE and recall patterns.

FDA Tests and Standards, map required tests from your code.

Regulatory Strategy Workspace, pull it into a defendable plan.

👉 Start free at complizen.ai

FAQ

Do I need to include external validation results?

Yes. Plan to report results on an internal test set and on at least one external dataset that is independent of the development data, to demonstrate generalizability.

How detailed should my PCCP be?

Detailed enough that each planned modification has defined retraining triggers, validation methods, and rollback criteria.

Is SHAP acceptable for explainability?

Yes. SHAP, Grad-CAM, and similar feature-attribution tools are widely used. The draft does not mandate a specific method, so choose one suited to your model type.