Top Reasons SaMD 510(k)s Get AI Letters (and How to Fix Them)

- Beng Ee Lim

- Jun 24, 2025


Updated: Sep 7, 2025

Most SaMD 510(k)s receive a first-cycle Additional Information (AI) letter rather than a final rejection. The recurring issues are missing cybersecurity materials (for §524B cyber devices), thin AI/ML evidence (data lineage, generalizability, validation), and weak predicate comparisons. Fix them by aligning with FDA's 2023 device software functions (DSF) premarket guidance, the January 2025 draft guidance on AI-enabled device software functions, and the 2025 final cybersecurity guidance, and by organizing everything in eSTAR.

In this article, we break down FDA's requirements for SaMD, why software validation is so demanding, and the practices that improve your odds of 510(k) success.

At a Glance: SaMD 510(k) Rejection Landscape

| Top Rejection Category | Impact Level | 2025 Enforcement |
|---|---|---|
| Cybersecurity Gaps | Critical | Mandatory since Oct 2023 |
| AI/ML Validation Issues | High | Enhanced scrutiny |
| Predicate Equivalence Problems | Critical | Ongoing challenge |
| Documentation Inconsistencies | Medium | Standard enforcement |
| Clinical Validation Gaps | Medium | Increasing focus |

The Current SaMD Rejection Reality

FDA had authorized 691 AI/ML-enabled medical devices as of October 2023, the majority through the 510(k) pathway. Even so, first-cycle deficiency letters remain common, because software raises challenges that traditional hardware devices do not.

Key Industry Context:

Over 37% of cleared AI/ML devices originated from non-AI/ML predicates, creating substantial equivalence challenges

Early FDA summaries called out "AI" in only 45% of approvals, fueling classification confusion.

Nearly half of FDA-authorized AI devices lack publicly reported clinical validation data

1. Cybersecurity and SBOM Compliance Failures

The New Reality

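On 1 Oct 2023, FDA began refusing to accept (RTA) cyber-device submissions that lack the information §524B requires, including an SBOM and a plan to monitor and address postmarket vulnerabilities; FDA's cybersecurity guidance also expects threat modeling as part of the secure-design evidence. As a result, cybersecurity gaps have become one of the most common automatic RTA triggers for connected SaMD files.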

Most Common Cybersecurity Rejection Reasons:

Missing Software Bill of Materials (SBOM)

Incomplete inventory of software components

Failure to identify third-party and open-source components

Missing version numbers and vulnerability assessments

Inadequate Cybersecurity Risk Assessment

No plan describing how the company will monitor, track, and address cybersecurity vulnerabilities that emerge after the device is on the market

Insufficient threat modeling for "cyber devices"

Missing penetration testing results

Post-Market Monitoring Plans

No defined process for vulnerability tracking

Unclear incident response procedures

Missing update and patch deployment strategies
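The SBOM gaps listed above lend themselves to an automated pre-submission check. The sketch below assumes your SBOM is a CycloneDX JSON file and flags any component that lacks a name, version, supplier, or package URL (purl). That field list is an illustrative assumption loosely based on the NTIA SBOM minimum elements, not FDA's acceptance criteria, and the check does not cover vulnerability status or support information.

```python
import json

# Illustrative per-component fields (an assumption, loosely based on the
# NTIA SBOM "minimum elements"); not FDA's official acceptance criteria.
REQUIRED_FIELDS = ("name", "version", "supplier", "purl")


def find_sbom_gaps(sbom: dict) -> list[str]:
    """Return human-readable gaps in a CycloneDX-style JSON SBOM."""
    components = sbom.get("components", [])
    if not components:
        return ["SBOM lists no components"]
    gaps = []
    for index, component in enumerate(components):
        label = component.get("name") or f"component #{index}"
        for field in REQUIRED_FIELDS:
            # Treat absent keys and empty values ("" or {}) as missing.
            if not component.get(field):
                gaps.append(f"{label}: missing '{field}'")
    return gaps


# Hypothetical two-component SBOM; the second entry is deliberately incomplete.
example_sbom = json.loads("""
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.5",
  "components": [
    {"type": "library", "name": "openssl", "version": "3.0.13",
     "supplier": {"name": "OpenSSL Project"},
     "purl": "pkg:generic/openssl@3.0.13"},
    {"type": "library", "name": "numpy", "purl": "pkg:pypi/numpy"}
  ]
}
""")

for gap in find_sbom_gaps(example_sbom):
    print(gap)
```

Run against the example, this flags the numpy entry for its missing version and supplier. Wiring a check like this into CI keeps the SBOM current as dependencies change, rather than rebuilding it just before submission.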

2. AI/ML Validation and Predicate Equivalence Issues

The Predicate Problem

More than a third of cleared AI/ML-enabled devices trace back, in the first generation of their predicate chain, to non-AI/ML devices. That gap makes substantial-equivalence arguments harder and frequently leads to deficiency letters.

Common AI/ML Rejection Patterns:

Inappropriate Predicate Selection

Claiming equivalence to non-AI devices for AI-enabled functions

Devices with the longest time since the last predicate device with an AI/ML component were haematology (2001), radiology (2001), and cardiovascular devices (2008).

Mismatched intended use statements between AI and non-AI predicates.

Insufficient Algorithm Validation

Missing training data demographics and bias assessment

Inadequate performance testing across diverse populations

No demonstration that clinical study participants and datasets are representative of the intended patient population (GMLP Principle 3)

Algorithm Change Control Issues

No predetermined change control plan (PCCP) for continuous learning algorithms

Unclear retraining triggers and validation processes

Missing performance monitoring metrics
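To make "retraining triggers" and "performance monitoring metrics" concrete, here is a minimal sketch of the kind of monitoring check a PCCP might describe. It assumes binary device outputs with confirmed ground truth for each monitoring period; the metrics, the floor values, and names such as `MonitoringWindow` are hypothetical, since a real PCCP pre-specifies its own metrics, thresholds, and statistical methods.

```python
from dataclasses import dataclass

# Hypothetical acceptance floors; a real PCCP pre-specifies its own.
MIN_SENSITIVITY = 0.90
MIN_SPECIFICITY = 0.85


def _ratio(numerator: int, denominator: int) -> float:
    if denominator == 0:
        raise ValueError("metric undefined: no cases in denominator")
    return numerator / denominator


@dataclass
class MonitoringWindow:
    """Confusion-matrix counts for one post-market monitoring period."""
    period: str
    tp: int
    fn: int
    tn: int
    fp: int

    @property
    def sensitivity(self) -> float:
        return _ratio(self.tp, self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return _ratio(self.tn, self.tn + self.fp)


def retraining_triggers(windows: list[MonitoringWindow]) -> list[tuple[str, list[str]]]:
    """Return (period, reasons) for every window that breaches a floor."""
    flagged = []
    for window in windows:
        reasons = []
        if window.sensitivity < MIN_SENSITIVITY:
            reasons.append(f"sensitivity {window.sensitivity:.3f} below {MIN_SENSITIVITY}")
        if window.specificity < MIN_SPECIFICITY:
            reasons.append(f"specificity {window.specificity:.3f} below {MIN_SPECIFICITY}")
        if reasons:
            flagged.append((window.period, reasons))
    return flagged


windows = [
    MonitoringWindow("2025-Q1", tp=92, fn=8, tn=172, fp=28),
    MonitoringWindow("2025-Q2", tp=85, fn=15, tn=175, fp=25),
]
print(retraining_triggers(windows))
```

Here only 2025-Q2 is flagged, because sensitivity dropped to 0.850. The point of writing the trigger down this explicitly is that reviewers can see exactly which observation starts the retraining and revalidation process.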

3. Documentation Level Misalignment

The Two-Tier Challenge

FDA uses a risk-based approach to decide how much software documentation a submission needs. Under the 2023 device software functions guidance, Enhanced Documentation applies when a failure or flaw of the software could present a hazardous situation with a probable risk of death or serious injury; otherwise, Basic Documentation is generally sufficient.

Common Documentation Failures:

Wrong Documentation Level Selection

Choosing Basic when Enhanced is required

Insufficient risk assessment to justify documentation level

Missing Software Design Specification (SDS) for Enhanced submissions

Architecture and Design Gaps

Enhanced submissions missing layered architecture diagrams that show interfaces and interdependencies between software modules; data inputs, outputs, and flow paths; and the cybersecurity architecture with its risk mitigations

Inadequate software architecture documentation

Missing data flow and security diagrams

Traceability Issues

Poor linkage between requirements, design, and testing

Missing risk-to-control traceability

Inadequate change management documentation

Pro tip: if your SaMD directly affects patient care, uses AI/ML, connects to the cloud, or forms part of a combination product, treat Enhanced as the safe default, and build the SDS, architecture, and traceability matrix before you draft the 510(k).
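Traceability gaps are easy to catch mechanically before a reviewer does. The sketch below assumes a simplified, hypothetical data model (requirement IDs linked to verification tests, and hazard IDs linked to the requirements that implement their risk controls) and reports broken or failing links. Real trace matrices usually carry more link types, such as design elements, and would be exported from your requirements tool rather than hard-coded.

```python
# Hypothetical trace data, e.g. exported from a requirements/ALM tool.
requirements = {
    "SRS-001": {"tests": ["VT-010"]},
    "SRS-002": {"tests": []},
    "SRS-003": {"tests": ["VT-031"]},
}
# Hazard ID -> requirement IDs that implement its risk controls.
risk_controls = {
    "HAZ-03": ["SRS-001"],
    "HAZ-07": [],
    "HAZ-09": ["SRS-099"],
}
test_results = {"VT-010": "pass", "VT-031": "fail"}


def trace_gaps(requirements: dict, risk_controls: dict, test_results: dict) -> list[str]:
    """List requirement->test and hazard->control links that are missing or failing."""
    gaps = []
    for req_id, links in requirements.items():
        if not links["tests"]:
            gaps.append(f"{req_id}: no verification test")
        for test_id in links["tests"]:
            result = test_results.get(test_id, "not run")
            if result != "pass":
                gaps.append(f"{req_id}: {test_id} is '{result}'")
    for hazard_id, controls in risk_controls.items():
        if not controls:
            gaps.append(f"{hazard_id}: no risk control")
        for req_id in controls:
            if req_id not in requirements:
                gaps.append(f"{hazard_id}: control {req_id} is not a known requirement")
    return gaps


for gap in trace_gaps(requirements, risk_controls, test_results):
    print(gap)
```

Each printed line corresponds to a question a reviewer would otherwise raise in an AI letter: an untested requirement, a failed test, a hazard with no control, or a control that points at a requirement that does not exist.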

4. Clinical Validation and Real-World Evidence Gaps

The Clinical Data Challenge

Almost half of FDA-authorized medical AI devices lack publicly reported clinical validation data, yet FDA is increasingly asking for robust clinical evidence in SaMD submissions.

Clinical Validation Rejection Reasons:

Insufficient Clinical Evidence

Missing prospective clinical studies

Retrospective data without appropriate controls

Performance metrics not clinically meaningful

Population Representation Issues

Only 14.5% of devices provided race or ethnicity data

Inadequate demographic diversity in training/testing datasets

Missing pediatric considerations where applicable

Performance Metric Problems

Using technical metrics instead of clinical outcomes

Missing sensitivity/specificity in real-world conditions

Inadequate comparison to clinical standard of care
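Reporting sensitivity and specificity per subgroup, with confidence intervals, addresses several of the gaps above at once. The sketch below assumes a hypothetical record format of (subgroup, truth, prediction) with 0/1 labels and uses the Wilson score interval, which is one common choice for proportions, not an FDA-prescribed method; the subgroup label is a placeholder.

```python
import math
from collections import defaultdict


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (about 95% for z = 1.96)."""
    if n == 0:
        raise ValueError("interval undefined for n = 0")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width


def subgroup_performance(records):
    """records: iterable of (subgroup, truth, prediction) with 0/1 labels."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, truth, prediction in records:
        c = counts[group]
        if truth and prediction:
            c["tp"] += 1
        elif truth:
            c["fn"] += 1
        elif prediction:
            c["fp"] += 1
        else:
            c["tn"] += 1

    report = {}
    for group, c in counts.items():
        positives, negatives = c["tp"] + c["fn"], c["tn"] + c["fp"]
        report[group] = {
            "n": positives + negatives,
            # None when a subgroup has no positive (or negative) cases at all.
            "sensitivity": c["tp"] / positives if positives else None,
            "sensitivity_95ci": wilson_interval(c["tp"], positives) if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
            "specificity_95ci": wilson_interval(c["tn"], negatives) if negatives else None,
        }
    return report


# Hypothetical records: subgroup "age 65+" has 10 positive and 20 negative cases.
records = (
    [("age 65+", 1, 1)] * 9 + [("age 65+", 1, 0)]
    + [("age 65+", 0, 0)] * 18 + [("age 65+", 0, 1)] * 2
)
print(subgroup_performance(records)["age 65+"])
```

With only 10 positive cases, the 0.90 sensitivity comes with an interval of roughly 0.60 to 0.98, which illustrates why small subgroups rarely support strong performance claims on their own.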

Bottom line: pair representative demographics with prospective, clinically relevant studies, and govern future model changes with a robust PCCP, if you want your AI 510(k) to survive first review.

5. Indication for Use and Labeling Inconsistencies

The Consistency Problem

Inconsistent Indications for Use (IFU) statements across the submission are one of the most common reasons FDA issues deficiency letters on 510(k)s.

Common IFU-Related Rejections:

Software-Specific Labeling Issues

Unclear software function descriptions

Missing AI/ML algorithm limitations

Inadequate user interface specifications

Clinical Decision Support vs. Diagnostic Claims

Confusion between CDS and CADx (Computer-Aided Diagnosis) devices

Inappropriate diagnostic claims for decision support tools

Missing physician oversight requirements

Performance Claims Misalignment

Laboratory performance vs. clinical performance confusion

Overstated algorithm capabilities

Missing performance limitations and warnings

Fix it: copy-paste your IFU verbatim into every section, keep claims inside proven performance, and use FDA’s CDS flowchart if there’s any doubt about diagnostic language.
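Verbatim consistency is also easy to check automatically. The sketch below compares the IFU text found in each section against a single reference statement, ignoring whitespace and curly-quote differences, and reports word-level changes using Python's `difflib.ndiff`. The section names and IFU wording are hypothetical, and the check only catches textual drift; it cannot tell whether a claim stays within proven performance.

```python
import difflib
import re


def normalize(text: str) -> str:
    """Ignore formatting-only differences: curly quotes and whitespace."""
    text = text.replace("\u2018", "'").replace("\u2019", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip()


def ifu_mismatches(reference: str, sections: dict[str, str]) -> dict[str, list[str]]:
    """Return word-level differences between each section's IFU text and the reference."""
    ref_words = normalize(reference).split(" ")
    problems = {}
    for name, text in sections.items():
        words = normalize(text).split(" ")
        if words != ref_words:
            # ndiff marks removed words with "- " and added words with "+ ";
            # its "? " hint lines and unchanged "  " lines are dropped.
            problems[name] = [
                line for line in difflib.ndiff(ref_words, words)
                if line.startswith(("- ", "+ "))
            ]
    return problems


# Hypothetical IFU text and section names.
reference_ifu = "The device is intended to assist radiologists in detecting lung nodules on chest CT."
sections = {
    "Form FDA 3881": reference_ifu,
    "Device description": "The device is  intended to assist radiologists in detecting lung nodules on chest CT.",
    "Labeling": "The device is intended to detect lung nodules on chest CT.",
}
for name, diff in ifu_mismatches(reference_ifu, sections).items():
    print(name, diff)
```

Only the labeling section is reported: its wording drops the radiologist-assist framing, which is exactly the kind of silent claim expansion that turns a decision-support tool into a diagnostic one.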

6. Software Lifecycle and Quality Management Deficiencies

The Process Problem

GMLP Principle 2, "Good Software Engineering and Security Practices Are Implemented," reflects a broader trend: FDA increasingly scrutinizes how SaMD is developed, not just what it does.

Process-Related Rejection Reasons:

IEC 62304 Compliance Gaps

Missing software lifecycle process documentation

Inadequate software safety classification

Poor change control procedures

Design Controls Deficiencies

Missing Design History File (DHF) elements

Inadequate software requirements specifications

Poor verification and validation documentation

Risk Management Issues

ISO 14971 implementation gaps

Missing software-specific hazard analysis

Inadequate risk control effectiveness verification

Pro tip: assume reviewers will ask to see your IEC 62304 mapping table, risk file, and change-control SOP—have them ready before you click “Submit.”

Emerging Rejection Trends in 2025

1. Enhanced AI/ML Scrutiny

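FDA's January 2025 draft guidance on AI-enabled device software functions recommends total-product-lifecycle documentation, model transparency information, and a plan for ongoing performance monitoring. A PCCP is optional, but it is the mechanism that lets you make pre-specified model updates without a new 510(k). Gaps in any of these areas are likely to draw deficiency questions.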

2. Interoperability and Integration Issues

With FDA exploring FHIR-based study data, SaMD files now get quizzed on FHIR mapping, EHR-integration test results and API security hardening. Treat this as the next cyber-checklist item.
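A FHIR mapping question usually comes down to which resource carries your output and which elements you populate. The sketch below assumes the device output is a single numeric score written back to an EHR as a FHIR R4 Observation; the patient ID, display text, unit, and model version are placeholders, and a production mapping would use coded terminology (such as LOINC) and be exercised against the receiving EHR's FHIR endpoint during integration testing.

```python
import json


def to_fhir_observation(patient_id: str, score: float, model_version: str) -> dict:
    """Map a hypothetical SaMD risk score to a minimal FHIR R4 Observation."""
    return {
        "resourceType": "Observation",
        "status": "final",  # required element in FHIR R4
        # Placeholder concept; a production mapping would use a coded
        # terminology (e.g. LOINC) rather than free text.
        "code": {"text": "Example AI-derived risk score"},
        "subject": {"reference": f"Patient/{patient_id}"},
        "valueQuantity": {"value": score, "unit": "score"},
        # Record which model version produced the result, for traceability.
        "device": {"display": f"Example SaMD, model {model_version}"},
    }


observation = to_fhir_observation("example-123", 0.82, "1.4.0")
print(json.dumps(observation, indent=2))
```

Recording the model version on every Observation is a small design choice that pays off later: it links each result in the EHR back to the exact algorithm version described in your submission and PCCP.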

3. Human Factors Engineering Gaps

Submissions lacking UI usability studies, error-mitigation analysis or workflow fit run afoul of FDA’s HF/UE guidance.

Take-away: 2025 reviewers expect SaMD 510(k)s to show airtight AI lifecycle control, speak FHIR when data cross systems, and prove a clinician can’t click the wrong button in real life. Shore up those three areas before you press “submit.”

Industry-Specific Rejection Patterns

Radiology SaMD (Highest Volume, Highest Scrutiny)

Most Common Issue: AI/ML tasks changed frequently along the device's predicate network, raising safety concerns

Key Challenge: Demonstrating equivalence when moving from image processing to AI interpretation

Frequent Rejection: Inadequate DICOM compliance and integration testing

Cardiovascular SaMD

Primary Issue: Clinical validation in diverse patient populations

Common Gap: Missing real-world performance data

Frequent Problem: Inadequate rhythm analysis validation

Digital Therapeutics

Main Challenge: Establishing appropriate predicate devices

Key Issue: Clinical efficacy demonstration

Common Failure: Missing behavioral outcome validation

Prevention Strategies: Learning from Rejections

1 — Start with a Pre-Sub

Book a Q-Submission 4-12 months before you file. Bring your predicate logic and a high-level cyber-architecture diagram so FDA can spot gaps early.

2 — Match docs to risk

Use the 2023 Software-Content guidance: Basic vs Enhanced documentation hinges on patient risk. When unsure, over-deliver—Enhanced needs an SDS, layered architecture, and full traceability.

3 — Build security in

Create the SBOM as you code, run routine pentests, and draft a post-market patch plan; §524B lets FDA refuse “cyber-devices” missing these items.

4 — Follow GMLP for AI/ML

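Adopt the 10 Good Machine Learning Practice (GMLP) guiding principles published by FDA, Health Canada, and the MHRA, add a Predetermined Change Control Plan if you expect to update the model after clearance, and show bias-checked, diverse training data.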

5 — Prove it clinically

Design prospective, multi-site studies with meaningful endpoints and run real-world validation in the intended workflow.

Lock these five habits in, and most “automatic” RTA triggers evaporate before your SaMD dossier even hits FDA’s inbox.

Key Takeaways for SaMD Companies

The rejection landscape for SaMD 510(k)s is evolving rapidly, with cybersecurity and AI/ML validation issues dominating recent patterns. Companies that proactively address these challenges through early FDA engagement, robust technical documentation, and comprehensive validation strategies significantly improve their odds of clearance.

Critical Success Factors:

Cybersecurity compliance is non-negotiable - ensure SBOM and post-market plans are comprehensive

Predicate strategy requires careful consideration - non-AI predicates create substantial equivalence challenges

Clinical validation is increasingly important - retrospective data alone is often insufficient

Documentation consistency matters - automated checking can prevent common IFU errors

Early FDA engagement pays dividends - Q-Sub meetings help identify potential issues before submission

The Bottom Line: SaMD rejections are largely preventable with proper preparation, but the bar continues to rise. Companies that invest in comprehensive regulatory strategy and leverage modern compliance tools will have significant advantages in this competitive landscape.

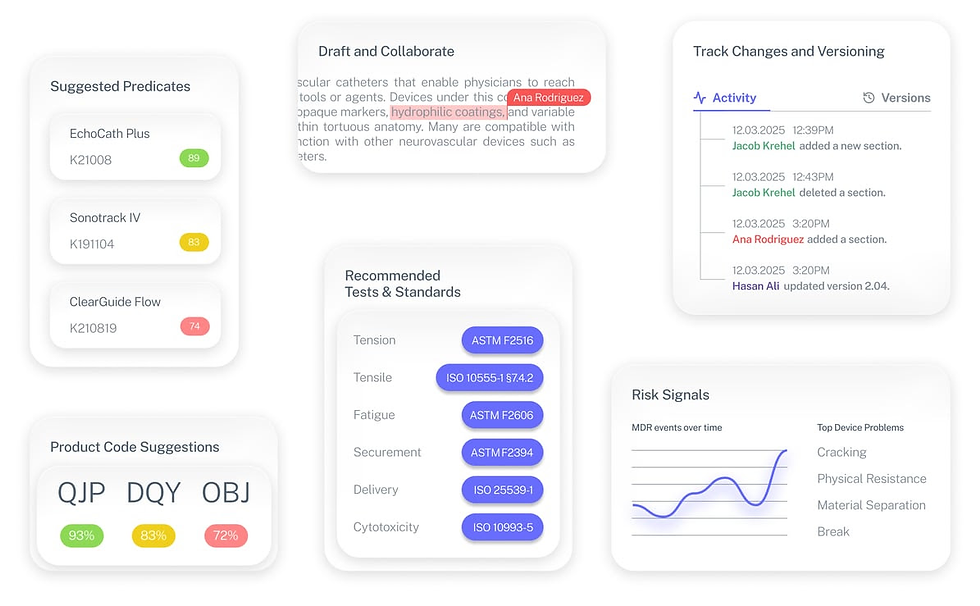

The Fastest Path to Market

No more guesswork. Move from research to a defendable FDA strategy, faster. Backed by FDA sources. Teams report 12 hours saved weekly.

FDA Product Code Finder, find your code in minutes.

510(k) Predicate Intelligence, see likely predicates with 510(k) links.

Risk and Recalls, scan MAUDE and recall patterns.

FDA Tests and Standards, map required tests from your code.

Regulatory Strategy Workspace, pull it into a defendable plan.

👉 Start free at complizen.ai

Frequently Asked Questions

Q: What's the current rejection rate for SaMD 510(k) submissions?

A: While specific SaMD rejection rates aren't publicly disclosed, industry experts estimate 60-75% of first-time SaMD submissions receive major deficiency letters or rejections, similar to overall 510(k) rates.

Q: How has cybersecurity enforcement changed rejection patterns?

A: Since October 2023, cybersecurity deficiencies have become a leading cause of immediate RTA decisions for cyber devices, with incomplete SBOMs and missing post-market vulnerability management plans the primary issues.

Q: Can AI/ML devices use non-AI predicates for 510(k) clearance?

A: Yes, but this approach is increasingly challenging. The FDA scrutinizes whether AI components represent "different technological characteristics" that could affect safety and effectiveness.

Q: What's the biggest mistake SaMD companies make in 510(k) submissions?

A: Underestimating the documentation requirements and failing to engage with FDA early in the development process. Most successful SaMD companies schedule Q-Sub meetings well before submission.

Q: How long do SaMD 510(k) reviews typically take?

A: FDA's goal for a 510(k) decision is 90 FDA review days, but the review clock stops while you respond to an Additional Information request, so SaMD submissions often take 4-8 calendar months end to end, especially for complex AI/ML devices.

Stay ahead of evolving SaMD regulations with Complizen's AI-powered compliance platform. Our regulatory intelligence system helps medical device companies navigate complex requirements and avoid common submission pitfalls.